Organizations looking to build clinical decision support systems are entering a rapidly expanding but highly regulated market.

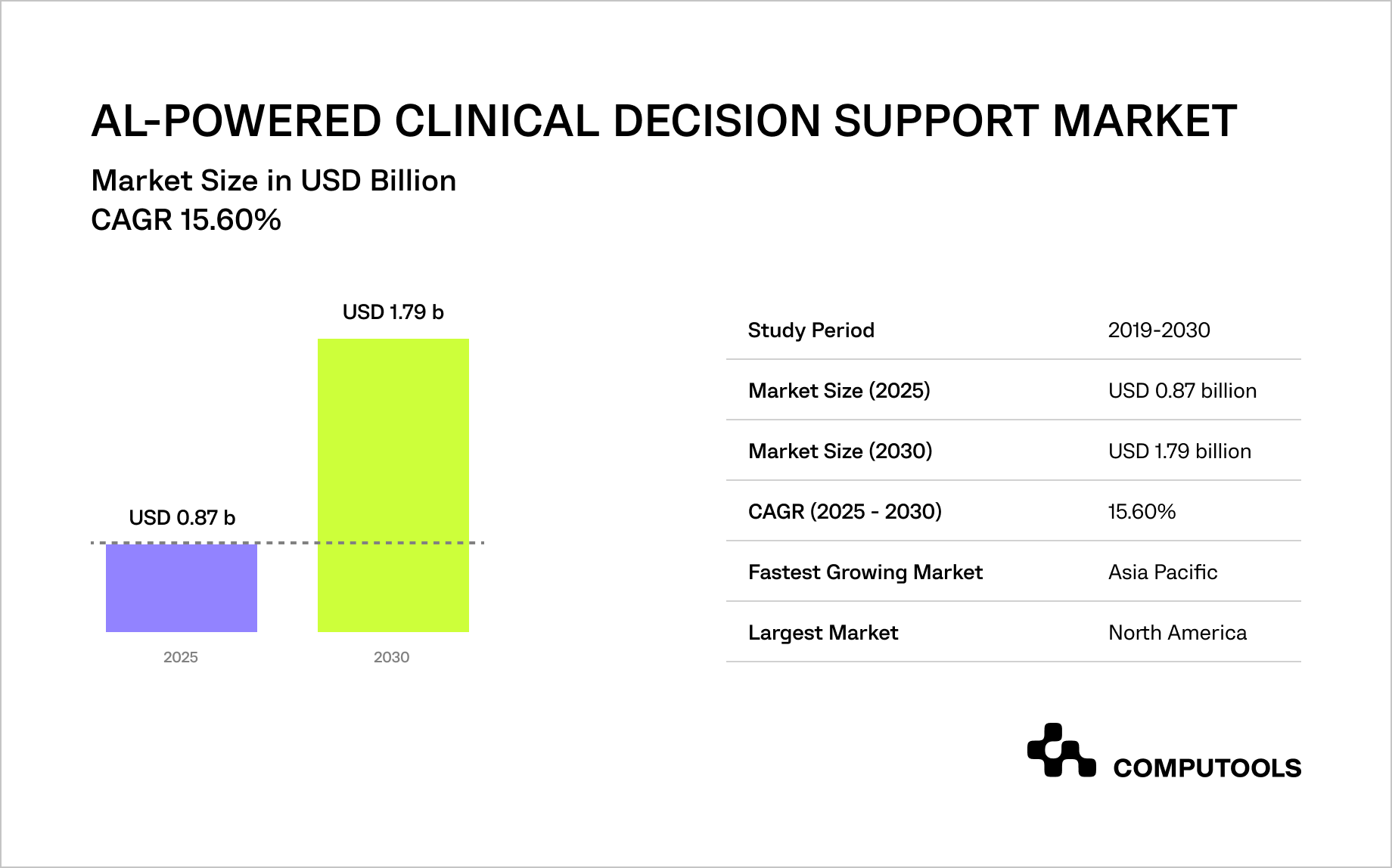

According to Mordor Intelligence, the global AI-powered clinical decision support market is expected to grow from USD 0.87 billion in 2025 to USD 1.79 billion by 2030, at a 15.6% CAGR, driven by rising clinical data volumes, pressure to reduce medical errors, and the need to control healthcare costs.

AI-powered clinical decision support systems are already demonstrating measurable value across primary care, cardiology, oncology, and infectious disease management. Clinical studies indicate that well-designed AI-powered CDSS can support diagnostic accuracy and early risk detection when integrated into real-world clinical workflows.

At the same time, many healthcare organizations struggle to move beyond pilots due to regulatory uncertainty, unclear boundaries between decision support and decision-making, and concerns around data privacy, explainability, and clinical accountability. As AI systems become more embedded in clinical workflows, even small design choices can significantly affect regulatory exposure.

This creates a central tension for healthcare leaders: AI clinical decision support is no longer optional for competitive, high-quality care, but building it without violating regulations, undermining clinician trust, or creating long-term compliance risk requires a deliberate, architecture-first approach.

How we implemented an AI-driven clinical support platform for a healthcare startup

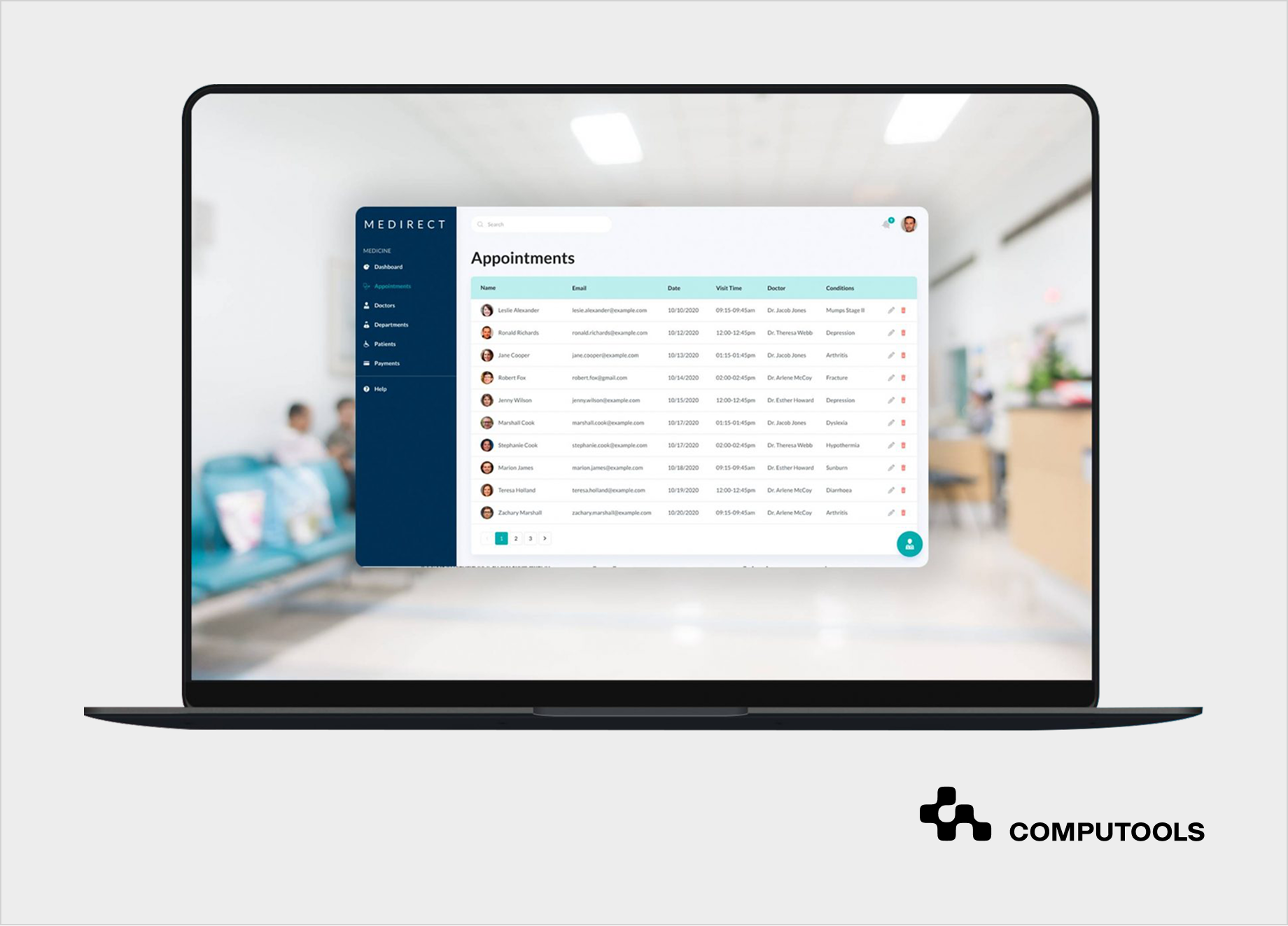

Medirect, a healthcare startup, set out to build a unified digital platform to improve patient management and clinical coordination across healthcare facilities. The core challenge went beyond technology: patient records, prescriptions, and clinical metrics were scattered across disconnected systems, making it difficult for medical staff to assess patient status quickly and consistently. Any solution also had to support differentiated access for clinicians and administrators while remaining safe to deploy in regulated clinical environments.

We designed a centralized clinical support platform that consolidated fragmented medical data and introduced standardized calculation logic to support clinical reasoning. AI components were deliberately positioned as decision-support tools rather than autonomous decision-makers, allowing clinicians to retain full control while benefiting from improved context and early risk indicators.

This design approach ensured alignment with healthcare AI regulations and reduced compliance risk from the earliest stages of development.

The platform was built as part of a broader hospital software development services engagement, focused on reliability, security, and workflow integration.

Advanced analytics and neural-network–based processing were applied selectively to improve data quality and diagnostic context, demonstrating how targeted AI development services can enhance clinical operations without disrupting existing care processes.

Following the launch, Medirect increased its market share by 15% and successfully entered a competitive healthcare solutions segment.

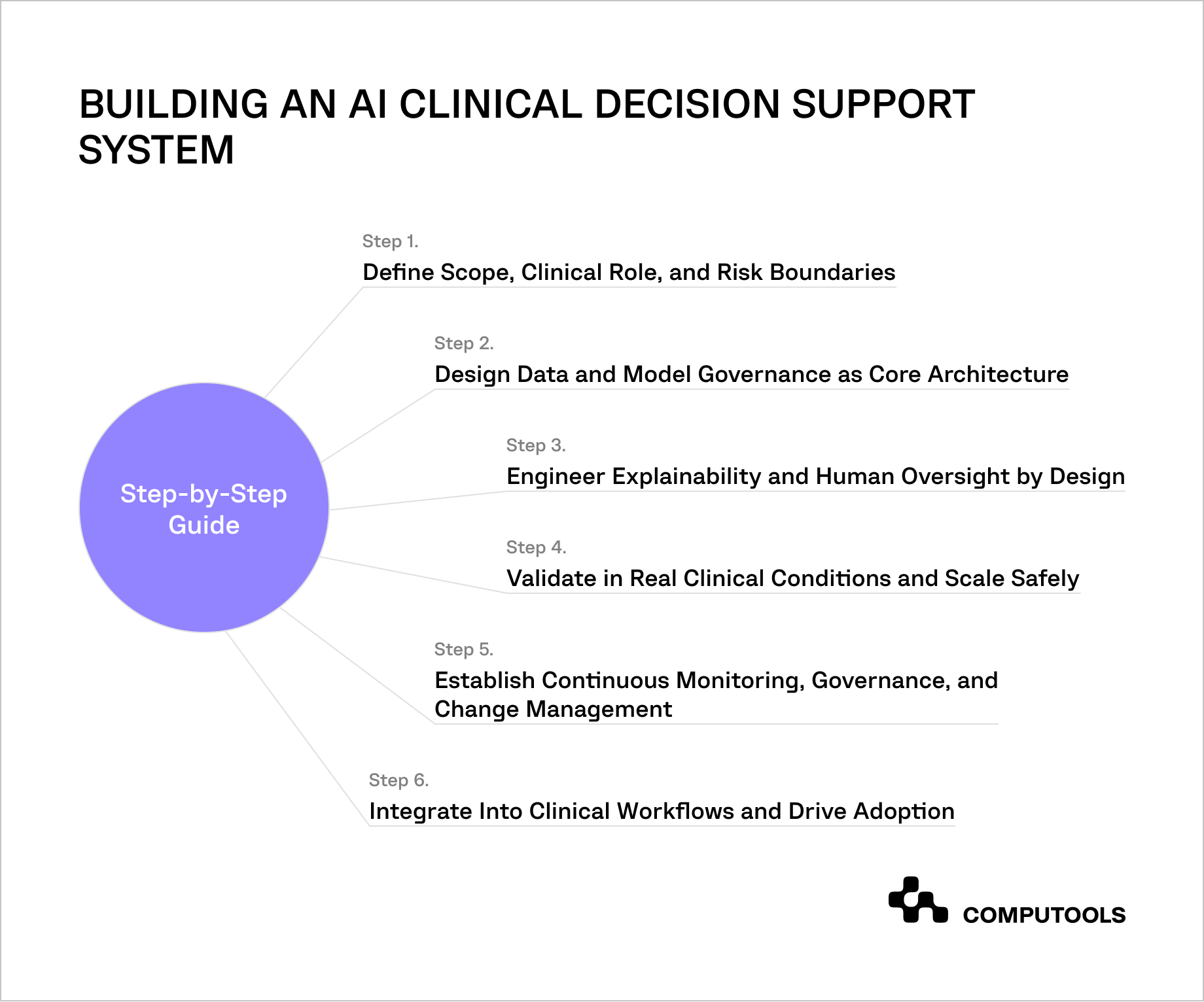

Step-by-step guide: how to build an AI clinical decision support system without violating regulations

The following step-by-step guide presents a practical, architecture-first method for developing AI clinical decision support systems in regulated healthcare settings. It draws on real-world implementation experience and emphasizes decisions that directly impact compliance, clinical responsibility, and long-term operational stability.

Step 1. Define Scope, Clinical Role, and Risk Boundaries

The first step in engineering a compliant medical AI solution is to explicitly define the system’s clinical role and responsibility boundaries. Regulatory risk depends on how the system is designed to be used in clinical workflows. An imprecise scope definition can unintentionally shift a product from decision support into autonomous medical decision-making, triggering significantly higher regulatory obligations.

At this stage, the system must be formally positioned as an AI clinical decision support system, with documented limitations, clear human oversight, and a well-defined intended use.

What must be defined upfront?

1. Clinical role: decision support only, not diagnosis or treatment determination

2. Target users: clinicians (not patients)

3. Decision ownership: final clinical decisions remain with a human professional

4. Output type: contextual insights, risk indicators, guideline references

5. Explicit exclusions: no autonomous diagnosis, no automated treatment selection
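The five items above can also be captured as a machine-checkable scope declaration, so that code paths producing out-of-scope outputs fail early. The following is a minimal sketch, not the Medirect implementation: the class names, role names, and `SCOPE` values are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class OutputType(Enum):
    CONTEXTUAL_INSIGHT = "contextual_insight"
    RISK_INDICATOR = "risk_indicator"
    GUIDELINE_REFERENCE = "guideline_reference"
    DIAGNOSIS = "diagnosis"                      # explicitly excluded from scope
    TREATMENT_SELECTION = "treatment_selection"  # explicitly excluded from scope


@dataclass(frozen=True)
class IntendedUse:
    clinical_role: str
    target_users: frozenset
    decision_owner: str
    allowed_outputs: frozenset

    def permits(self, output_type: OutputType, user_role: str) -> bool:
        """True only if both the output type and the user role are in scope."""
        return output_type in self.allowed_outputs and user_role in self.target_users


# Hypothetical scope declaration mirroring the list above.
SCOPE = IntendedUse(
    clinical_role="decision support only",
    target_users=frozenset({"physician", "nurse"}),
    decision_owner="licensed clinician",
    allowed_outputs=frozenset({
        OutputType.CONTEXTUAL_INSIGHT,
        OutputType.RISK_INDICATOR,
        OutputType.GUIDELINE_REFERENCE,
    }),
)

print(SCOPE.permits(OutputType.RISK_INDICATOR, "physician"))  # in scope
print(SCOPE.permits(OutputType.DIAGNOSIS, "physician"))       # excluded output
print(SCOPE.permits(OutputType.RISK_INDICATOR, "patient"))    # excluded user
```

Keeping the declaration immutable (`frozen=True`) means a scope change has to go through a code change and review rather than a runtime toggle.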

Regulatory alignment actions

• Document intended use in internal product and technical documentation

• Perform preliminary regulatory risk classification based on target markets:

– EU: assess potential classification under the EU AI Act and MDR / IVDR

– US: evaluate the applicability of the FDA software as a medical device (SaMD) guidance

• Explicitly confirm that the system remains within clinical decision support boundaries rather than autonomous decision-making

• Ensure scope definition is consistent across system behavior, UX language, product documentation, and external communications

In the Medirect project, the system scope was intentionally limited to data consolidation and clinical context support. AI outputs were restricted to structured insights and standardized calculations, while all diagnostic and treatment decisions remained under clinician control. This clear scope definition reduced regulatory ambiguity and simplified subsequent architecture decisions.

Step 2. Design Data and Model Governance as Core Architecture

Medical AI compliance depends on traceability, accountability, and control across the entire data and model lifecycle. Governance must be embedded in the system architecture rather than handled solely through external processes or documentation. Without this foundation, compliance cannot be sustained as models evolve or data sources change.

To meet medical AI software compliance requirements, governance mechanisms must operate continuously and at scale.

Data governance requirements

• Defined data sources with documented provenance

• Use of standardized clinical formats and terminologies where possible

• Validation of data completeness and representativeness

• Bias identification and mitigation strategies

• Controlled access to sensitive data via role-based permissions

Model governance requirements

• Version control for all models and configurations

• Documented change-management procedures

• Audit logs linking model outputs to input data and model versions

• Defined human-in-the-loop checkpoints for clinical review

• Clear rollback and revalidation processes for model updates
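As an illustration of the audit-log requirement above, the sketch below links each model output to a hash of its input data and to the model version that produced it. It is a simplified example under stated assumptions: the record fields, the `record_inference` helper, and the `sepsis_risk` model name are hypothetical, and a production system would persist records to tamper-evident storage rather than keep them in memory.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone
from typing import Optional


def fingerprint(payload: dict) -> str:
    """Stable SHA-256 hash of a JSON-serializable payload (key order ignored)."""
    canonical = json.dumps(payload, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class AuditRecord:
    timestamp: str
    model_name: str
    model_version: str
    input_hash: str
    output: dict
    reviewed_by: Optional[str] = None  # set at the human-in-the-loop checkpoint


def record_inference(model_name: str, model_version: str,
                     inputs: dict, output: dict) -> AuditRecord:
    """Create an audit record tying an output to its inputs and model version."""
    return AuditRecord(
        timestamp=datetime.now(timezone.utc).isoformat(),
        model_name=model_name,
        model_version=model_version,
        input_hash=fingerprint(inputs),
        output=output,
    )


# Hypothetical model name, version, and values, for illustration only.
rec = record_inference(
    "sepsis_risk", "1.4.2",
    inputs={"heart_rate": 112, "lactate": 2.9},
    output={"risk_score": 0.37},
)
print(json.dumps(asdict(rec), indent=2))
```

Hashing the inputs instead of storing them keeps sensitive values out of the log while still allowing a reviewer to confirm which input snapshot produced a given output.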

For Medirect, disparate clinical metrics were consolidated into a centralized platform with standardized calculation logic and role-based access control. Model behavior and data transformations were traceable, enabling auditability and long-term governance without disrupting clinical workflows.

Step 3. Engineer Explainability and Human Oversight by Design

Explainability and human oversight are mandatory requirements for AI systems operating in clinical environments. Regulatory frameworks require that clinicians understand how outputs are generated, which data elements influenced them, and where uncertainty exists. Without this transparency, AI-supported insights cannot be reliably reviewed, challenged, or audited in real clinical practice.

From an engineering standpoint, explainability and oversight must be built into the core of the system rather than implemented as interface-level additions. In an AI clinical decision support system, these mechanisms directly determine whether the system can be trusted, validated, and safely maintained after deployment.

Explainability requirements

• Clear identification of input data elements contributing to each output

• Visibility into key factors influencing scores, alerts, or prioritisation

• Explicit representation of uncertainty or confidence levels

• Traceable links to reference data, rules, or clinical guidelines

• Consistent explanation of logic across comparable clinical scenarios
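One way to make these requirements concrete is to treat the explanation as part of the output's data structure rather than something rendered afterwards. The sketch below is illustrative only: the field names, the factor contributions, and the placeholder guideline reference are assumptions, and the way contributions are computed (for example, by a feature-attribution method) is outside its scope.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Factor:
    name: str            # input data element that influenced the output
    value: float         # observed value of that element
    contribution: float  # signed contribution to the score (assumed precomputed)


@dataclass(frozen=True)
class AdvisoryOutput:
    score: float
    interval: Tuple[float, float]  # explicit uncertainty range around the score
    factors: Tuple[Factor, ...]
    guideline_ref: str             # traceable link to a rule or guideline
    label: str = "Advisory only: the clinical decision rests with the clinician."

    def top_factors(self, n: int = 3):
        """Return the n factors with the largest absolute contribution."""
        ranked = sorted(self.factors, key=lambda f: abs(f.contribution), reverse=True)
        return ranked[:n]


# Hypothetical values for illustration; not clinical data.
output = AdvisoryOutput(
    score=0.37,
    interval=(0.29, 0.46),
    factors=(
        Factor("heart_rate", 112, 0.12),
        Factor("lactate", 2.9, 0.18),
        Factor("age", 54, -0.03),
    ),
    guideline_ref="LOCAL-PROTOCOL-PLACEHOLDER",
)

for f in output.top_factors(2):
    print(f"{f.name}={f.value} contributes {f.contribution:+.2f}")
print(f"score={output.score}, interval={output.interval}")
print(output.label)
```

Because every output carries its factors, uncertainty range, and reference, comparable cases are explained through the same structure, which supports the consistency requirement in the list above.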

Human oversight requirements

• Final clinical decisions remain entirely under human authority

• AI outputs are advisory and clearly labelled as such

• Clinicians can override, ignore, or defer AI-supported suggestions

• Interactions with AI outputs are logged for audit and review

• Defined escalation paths exist for ambiguous or high-risk cases
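The oversight requirements above imply that every clinician response to an AI output is captured as a first-class event. A minimal sketch of such a log follows; the `Action` values, identifiers, and the in-memory storage are assumptions made for illustration, not a description of any specific product.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class Action(Enum):
    ACCEPTED = "accepted"
    OVERRIDDEN = "overridden"
    IGNORED = "ignored"
    DEFERRED = "deferred"
    ESCALATED = "escalated"


@dataclass(frozen=True)
class Interaction:
    output_id: str
    clinician_id: str
    action: Action
    note: str
    timestamp: str


class InteractionLog:
    """Append-only, in-memory log; a real system would persist entries."""

    def __init__(self):
        self._entries = []

    def record(self, output_id: str, clinician_id: str,
               action: Action, note: str = "") -> Interaction:
        entry = Interaction(output_id, clinician_id, action, note,
                            datetime.now(timezone.utc).isoformat())
        self._entries.append(entry)
        return entry

    def entries(self):
        """Read-only snapshot for audit and review."""
        return tuple(self._entries)

    def escalated(self):
        """Entries that should follow the defined escalation path."""
        return [e for e in self._entries if e.action is Action.ESCALATED]


# Hypothetical identifiers for illustration.
log = InteractionLog()
log.record("out-001", "clin-17", Action.ACCEPTED)
log.record("out-002", "clin-17", Action.OVERRIDDEN, note="Conflicts with recent imaging")
log.record("out-003", "clin-22", Action.ESCALATED, note="Ambiguous presentation")
print([e.output_id for e in log.escalated()])
```

The note is optional on purpose, so that overriding or ignoring a suggestion does not add mandatory typing to the clinician's workflow.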

Interface and workflow constraints

• AI-supported outputs are visually distinct from clinician-entered data

• Explanations are accessible without disrupting clinical workflows

• System language avoids prescriptive or directive phrasing

• Oversight actions require minimal additional cognitive effort

In the Medirect project, AI components were designed to augment clinical context rather than replace clinical judgment. By restricting AI outputs to analytical insights and standardized calculations, the platform avoided framing recommendations as clinical decisions, ensuring that responsibility and authority remained with medical professionals.

Step 4. Validate in Real Clinical Conditions and Scale Safely

Laboratory benchmarks and offline model accuracy are insufficient for healthcare environments. Clinical AI systems must be validated under real operating conditions to ensure they do not introduce workflow friction, alert fatigue, or unintended clinical risk. This step is critical for transitioning from prototype to production in regulated settings.

In clinical decision support software development, validation must address model performance, clinical usability, integration quality, and real-world safety before any broad rollout.

Validation objectives

• Confirm that AI outputs remain clinically appropriate under real patient variability

• Verify that system behavior aligns with the defined scope and intended use

• Identify workflow disruptions, latency issues, or alert fatigue risks

• Ensure AI outputs are interpretable and actionable in time-sensitive contexts

Recommended validation approach

• Silent mode deployment: AI runs in the background without influencing decisions

• Department-level pilots: initial rollout in controlled units (e.g., ICU, cardiology)

• Dual evaluation: measure both technical metrics and clinician interaction patterns

• Feedback loops: collect structured input from clinicians and operational staff

• Phased rollout: expand usage incrementally after resolving identified issues
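Silent mode deployment, the first item above, can be sketched as a thin wrapper that always runs and logs the model but only surfaces its output once the pilot phase allows it. The `SilentModeRunner` class and the `toy_model` function are hypothetical names used for illustration; the toy scoring rule is not a clinical model.

```python
from typing import Callable, Optional


class SilentModeRunner:
    """Runs a model on every case but hides outputs while in silent mode."""

    def __init__(self, model: Callable[[dict], float], silent: bool = True):
        self.model = model
        self.silent = silent
        self.shadow_log = []  # kept for offline comparison with clinician decisions

    def evaluate(self, case_id: str, features: dict) -> Optional[float]:
        score = self.model(features)
        self.shadow_log.append({"case_id": case_id, "score": score})
        # In silent mode nothing reaches the clinician-facing interface.
        return None if self.silent else score


def toy_model(features: dict) -> float:
    """Placeholder scoring rule for illustration only."""
    return min(1.0, features.get("heart_rate", 0) / 200)


runner = SilentModeRunner(toy_model, silent=True)
shown = runner.evaluate("case-1", {"heart_rate": 110})
print(shown, runner.shadow_log)

runner.silent = False  # later pilot phase: outputs become visible
print(runner.evaluate("case-2", {"heart_rate": 90}))
```

Logging in both modes means the shadow log remains comparable before and after the switch to visible outputs.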

Operational checks during pilots

• Frequency and relevance of AI-generated alerts

• Time required for clinicians to interpret AI outputs

• Rate of overrides or ignored recommendations

• Consistency of AI behavior across similar clinical cases
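The operational checks above lend themselves to simple aggregate metrics computed from pilot event logs. The following sketch assumes a hypothetical event format (a dict with `action` and `seconds_to_interpret` keys); real pilots would define their own schema and acceptance thresholds.

```python
from collections import Counter
from statistics import median


def pilot_metrics(events: list) -> dict:
    """Summarize alert volume, override/ignore rates, and interpretation time."""
    total = len(events)
    if total == 0:
        return {"alerts": 0, "override_rate": 0.0, "ignored_rate": 0.0,
                "median_seconds_to_interpret": None}
    actions = Counter(e["action"] for e in events)
    times = [e["seconds_to_interpret"] for e in events]
    return {
        "alerts": total,
        "override_rate": actions["overridden"] / total,
        "ignored_rate": actions["ignored"] / total,
        "median_seconds_to_interpret": median(times),
    }


# Hypothetical pilot events for illustration.
events = [
    {"action": "accepted", "seconds_to_interpret": 12},
    {"action": "overridden", "seconds_to_interpret": 30},
    {"action": "ignored", "seconds_to_interpret": 5},
    {"action": "accepted", "seconds_to_interpret": 9},
]
print(pilot_metrics(events))
```

Tracking the median rather than the mean keeps a few unusually long reviews from masking the typical interpretation time.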

For Medirect, the platform was introduced through phased deployment across selected workflows. This allowed real-world observation of how clinicians interacted with AI-supported insights, enabling refinements before wider adoption. As a result, the system scaled without disrupting clinical routines or increasing cognitive load.

Step 5. Establish Continuous Monitoring, Governance, and Change Management

In regulated healthcare environments, compliance is not a one-time milestone achieved at launch. Clinical AI systems evolve continuously as data distributions shift, clinical practices change, and models are updated. Without formal monitoring and change-management processes, even a well-designed system can drift outside its approved scope or introduce hidden risks over time.

To remain safe and scalable, a compliant clinical decision support system must be governed throughout its entire lifecycle, from post-deployment monitoring to controlled updates and documented revalidation.

Continuous monitoring requirements

• Ongoing tracking of model performance and output stability

• Detection of data drift, concept drift, and unexpected behaviour changes

• Monitoring of alert frequency and clinical relevance over time

• Periodic review of override and dismissal patterns

• Identification and logging of safety-critical incidents
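Data drift detection can take many forms. One commonly used and easily audited option is the population stability index (PSI), which compares the distribution of a feature at validation time with its distribution in production. The sketch below is one possible approach, not the method used in the Medirect project; the bin count, the floor for empty bins, and the 0.25 alert threshold are conventions assumed here and should be set per feature.

```python
import math


def population_stability_index(expected: list, actual: list, bins: int = 10) -> float:
    """PSI between a baseline sample and a production sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic data for illustration: the production sample is shifted upward.
baseline = [i / 100 for i in range(100)]
production = [min(0.99, x + 0.3) for x in baseline]

psi = population_stability_index(baseline, production)
DRIFT_THRESHOLD = 0.25  # assumed rule-of-thumb threshold
print(f"PSI={psi:.2f}, drift flagged: {psi > DRIFT_THRESHOLD}")
```

PSI only covers shifts in input distributions. Concept drift, where the relationship between inputs and outcomes changes, needs outcome-based monitoring in addition.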

Change-management controls

• Version control for models, configurations, and data pipelines

• Formal impact assessment for any model or data change

• Triggered revalidation when thresholds, features, or training data change

• Clear rollback mechanisms in case of degraded performance

• Synchronisation of technical changes with documentation updates
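Triggered revalidation can be enforced mechanically by comparing a candidate release against the last approved configuration. The sketch below assumes a hypothetical configuration layout and key names; it only reports which validation-relevant fields changed and leaves the revalidation procedure itself to the organization's change-management process.

```python
# Fields whose change should trigger revalidation (assumed for illustration).
REVALIDATION_KEYS = ("thresholds", "features", "training_data_version")


def changed_fields(approved: dict, candidate: dict) -> list:
    """Return validation-relevant fields that differ; empty means no trigger."""
    return [k for k in REVALIDATION_KEYS if approved.get(k) != candidate.get(k)]


# Hypothetical configurations for illustration.
approved = {
    "model_version": "1.4.2",
    "thresholds": {"alert": 0.30},
    "features": ["heart_rate", "lactate", "age"],
    "training_data_version": "2024-11",
    "ui_theme": "light",
}
candidate = dict(approved, model_version="1.5.0",
                 thresholds={"alert": 0.25}, ui_theme="dark")

triggers = changed_fields(approved, candidate)
if triggers:
    print("Revalidation required; changed:", triggers)
else:
    print("No revalidation trigger")
```

A check like this fits naturally into a release pipeline, where a non-empty result blocks deployment until the revalidation record is attached.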

Governance and accountability

• Defined ownership for clinical safety, data governance, and AI oversight

• Regular review cycles involving clinical, technical, and compliance stakeholders

• Centralised audit logs supporting internal review and regulatory inspection

• Clear escalation and decision-making authority for system changes

In the Medirect project, monitoring and governance were treated as ongoing operational concerns rather than one-time launch tasks. Model behavior, data transformations, and system updates were fully traceable, enabling controlled evolution of the platform without compromising safety, transparency, or regulatory alignment.

Step 6. Integrate Into Clinical Workflows and Drive Adoption

Even a compliant and well-validated system will fail if it disrupts daily clinical routines. Adoption in healthcare depends less on model sophistication and more on how seamlessly the system fits into existing workflows. Poor integration increases cognitive load, slows decision-making, and ultimately leads to system avoidance.

For AI to deliver sustained value, it must be deployed as a clinical decision support tool that respects doctors' time constraints, clinical context, and established patterns of care.

Workflow integration principles

• Embed AI outputs directly into existing clinical systems (e.g., EHR), not as standalone dashboards

• Present insights at the point of decision-making, not before or after

• Avoid duplicating data entry or forcing workflow changes

• Ensure low-latency responses suitable for time-sensitive scenarios

• Maintain clear separation between AI suggestions and clinical authority

Adoption and change enablement

• Role-specific views tailored to doctors, nurses, and administrators

• Training focused on practical use cases, not model mechanics

• Clear communication of system limitations and intended use

• Gradual exposure to AI-supported features to build trust

• Ongoing feedback channels for clinicians and operational staff

Signals of successful adoption

• Consistent voluntary engagement with AI-supported outputs

• Stable interpretation times that do not increase clinical workload

• Predictable override patterns aligned with clinical judgment

• Minimal workarounds or bypass behaviour by clinicians

In Medirect, AI-supported insights were embedded into existing clinical workflows rather than introduced as a separate interface. Role-based access ensured that each user group interacted only with relevant functionality, enabling adoption without disrupting established care processes or increasing cognitive burden.

Deep EHR and EMR integration is critical for adoption. Systems aligned with platforms like Epic or Cerner and built on FHIR standards enable clinicians to use AI insights directly within existing workflows without switching tools or disrupting care. For a deeper discussion of clinical documentation integration, see our detailed guide.
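To illustrate what FHIR-based delivery of an AI insight might look like, the sketch below builds a `RiskAssessment` resource as a plain dictionary. The element names follow the FHIR R4 `RiskAssessment` resource as we understand it and should be verified against the specification and the target EHR's profiles; the helper name, identifiers, and text values are hypothetical.

```python
import json


def build_risk_assessment(patient_id: str, probability: float, outcome_text: str,
                          observation_ids: list, method_text: str) -> dict:
    """Build a FHIR-style RiskAssessment payload for an advisory risk score."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return {
        "resourceType": "RiskAssessment",
        "status": "preliminary",  # not final until a clinician has reviewed it
        "subject": {"reference": f"Patient/{patient_id}"},
        "method": {"text": method_text},
        # References to the observations the score was based on (traceability).
        "basis": [{"reference": f"Observation/{oid}"} for oid in observation_ids],
        "prediction": [{
            "outcome": {"text": outcome_text},
            "probabilityDecimal": probability,
        }],
        "note": [{"text": "Advisory output. Clinical decision rests with the clinician."}],
    }


# Hypothetical identifiers and values for illustration.
resource = build_risk_assessment(
    patient_id="example-123",
    probability=0.37,
    outcome_text="30-day readmission",
    observation_ids=["obs-1", "obs-2"],
    method_text="Risk model v1.4.2 (hypothetical)",
)
print(json.dumps(resource, indent=2))
```

Carrying the basis references and the method text inside the resource keeps the explanation attached to the insight wherever the EHR displays it.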

Assess the regulatory, data, and model-governance requirements of an AI clinical decision support system—engage experts to estimate architecture, timelines, and compliance effort.

Artificial Intelligence in clinical decision-making: advantages vs. disadvantages

Artificial intelligence is becoming more integrated into clinical workflows, supporting diagnosis, risk assessment, and treatment planning while improving operational efficiency. However, the value of AI in healthcare is inseparable from its limitations. A realistic assessment requires weighing measurable clinical and operational benefits against safety, ethical, and governance risks.

Proper implementation of AI in clinical decision making can enhance clinical judgment. However, if implemented poorly, it may create new types of systemic risks that traditional medical devices did not pose.

Key advantages of AI-driven clinical decision support

| Advantage | Clinical and operational impact |
| --- | --- |
| Reduced diagnostic and prescription errors | AI systems flag abnormal lab results, imaging inconsistencies, allergies, and drug interactions, reducing the risk of adverse drug events |
| Improved care coordination | Real-time synchronization across physicians, nurses, pharmacists, and discharge workflows through EHR integration |
| Personalized care planning | Multimodal patient data enables tailored treatment plans and lifestyle recommendations |
| Cost reduction | Identification of duplicate tests and unnecessary procedures lowers direct and indirect care costs |
| Better institutional outcomes | Hospitals using mature healthcare CDSS development approaches report improvements in quality metrics, accreditation outcomes, and patient satisfaction scores |

These benefits are supported by multiple clinical reviews showing improvements in diagnostic accuracy, workflow efficiency, and clinician productivity when AI-enhanced CDSS are deployed with proper oversight.

Adopting digital systems like AI clinical support often aligns with broader healthcare digital transformation, including CRM for patient engagement. For insights on healthcare CRM benefits, see our analysis of the Top 12 Benefits of CRM Development for the Healthcare Industry.

Key disadvantages of AI-driven clinical decision support

| Risk category | Clinical and operational impact |
| --- | --- |
| Algorithmic bias | Models trained on incomplete or non-representative datasets may underperform for certain patient groups, leading to unequal care quality |
| Model drift | Changes in patient populations or clinical practices can degrade model performance over time |
| Limited explainability | Lack of transparency makes it difficult for clinicians to validate or justify AI-supported outputs |
| Overreliance on AI outputs | Excessive trust in AI suggestions may weaken clinical judgment in complex or atypical cases |
| Accountability ambiguity | Responsibility for adverse outcomes becomes unclear when AI-supported insights influence decisions |
| Data privacy exposure | Aggregation of sensitive health data increases the impact of security incidents and regulatory non-compliance |
| Adversarial manipulation | Manipulated inputs can cause unsafe or misleading outputs without obvious system failures |

The advantages and risks outlined above illustrate a simple reality: AI-driven clinical decision support creates value only when benefits and limitations are addressed together. Improved outcomes, efficiency, and care quality are achievable, but they depend on deliberate design choices and ongoing governance.

When organizations build clinical decision support systems with explicit attention to data quality, explainability, and human oversight, AI becomes a reliable clinical support layer rather than a source of hidden operational or regulatory risk.

What capabilities and system types define modern AI clinical decision support?

Modern AI-CDSS are defined not by individual features, but by how multiple capabilities work together within clinical workflows and regulatory constraints. In mature implementations delivered through healthtech software development services, these capabilities typically include:

1. Real-time clinical insights. Point-of-care guidance that highlights contraindications during order entry and supports differential diagnosis based on symptoms, lab results, and patient history.

2. Predictive analytics for diagnosis and treatment. Models that analyse historical and longitudinal data to estimate risks such as readmission, disease progression, or post-operative complications, enabling early intervention.

3. Medication safety and drug interaction alerts. Automated checks against comorbidities, allergies, lab values, and drug–drug interactions to reduce adverse drug events.

4. Deep EHR / EMR integration. Context-aware decision support embedded directly into systems like Epic or Cerner, using FHIR standards to ensure interoperability and traceability in line with regulatory requirements for clinical decision support.

5. NLP-driven clinical note analysis. Extraction of risk factors, comorbidities, and inconsistencies from unstructured data such as progress notes, discharge summaries, and pathology reports.

6. Multimodal and genomic data integration. Combined analysis of imaging, laboratory data, wearables, and genomic markers to support precision medicine and personalised treatment pathways.

7. Automated compliance monitoring. Continuous review of documentation, workflows, and deviations to support the operation of a HIPAA-compliant clinical decision support system and reduce audit overhead.

Common system types used in clinical decision support

AI support systems vary in capability, architectural approach, and intended purpose.

In practice, healthcare organizations utilize a combination of the following types:

• Knowledge-based CDSS. Rule-driven systems based on clinical guidelines and “if–then” logic, commonly used for allergy checks, drug–drug interaction alerts, and protocol enforcement.

• Non-knowledge-based (ML-driven) CDSS. Systems that rely on machine learning and pattern recognition, adapting as new data becomes available rather than following static rule sets.

• Diagnostic decision support systems. Tools that assist clinicians by analysing symptoms, imaging, or laboratory results to suggest possible diagnoses.

• Therapeutic decision support systems. Systems focused on treatment planning and care pathway recommendations, particularly in oncology, cardiology, and chronic disease management.

• Administrative decision support systems. Platforms optimising scheduling, staffing, capacity planning, and cost control rather than direct clinical decisions.

• Integrated CDSS. Decision support embedded directly into EHR environments, providing real-time, context-aware insights without disrupting workflows.

• Stand-alone CDSS. Isolated systems typically used in specialised clinics or outpatient settings where full EHR integration is limited.

• Patient-oriented CDSS. Patient-facing tools that deliver personalised guidance, reminders, and coaching, commonly used in chronic disease management and remote monitoring.
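The knowledge-based type from the list above is the simplest to illustrate, because its "if–then" logic maps directly onto explicit rules. The sketch below uses placeholder drug names and a single made-up rule; it is not a clinical knowledge base, and the `InteractionRule` and `check_order` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionRule:
    drugs: frozenset  # set of drugs that interact when taken together
    severity: str
    message: str


# Placeholder rule for illustration only; real rules come from a curated source.
RULES = (
    InteractionRule(frozenset({"drug_a", "drug_b"}), "high",
                    "drug_a and drug_b should not be combined (placeholder rule)"),
)


def check_order(new_drug: str, active_meds: set, allergies: set) -> list:
    """Return advisory alerts for a new order; an empty list means no rule fired."""
    alerts = []
    # IF the patient has a recorded allergy to the drug THEN alert.
    if new_drug in allergies:
        alerts.append(("high", f"Recorded allergy to {new_drug}"))
    # IF the new drug completes an interacting combination THEN alert.
    for rule in RULES:
        if new_drug in rule.drugs and (rule.drugs - {new_drug}) <= active_meds:
            alerts.append((rule.severity, rule.message))
    return alerts


print(check_order("drug_a", active_meds={"drug_b"}, allergies=set()))
print(check_order("drug_c", active_meds={"drug_b"}, allergies={"drug_c"}))
print(check_order("drug_a", active_meds={"drug_d"}, allergies=set()))
```

Because each alert traces back to one named rule, this style is straightforward to audit, which is one reason rule-driven checks often sit alongside ML-driven components rather than being replaced by them.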

Across all system types, alignment with FDA guidelines for AI medical software and equivalent regulatory frameworks determines whether solutions can scale safely beyond pilot deployments.

The capabilities and system types outlined above show that building an effective AI-driven CDSS is not about isolated features, but about architectural coherence, regulatory alignment, and clinical workflow fit.

Successful clinical decision support system implementation requires experience in regulated healthcare environments, disciplined engineering practices, and a clear understanding of clinical responsibility boundaries. This is where the choice of technology partner becomes critical.

Why build a clinical decision support system with Computools?

Building AI-driven clinical decision support requires more than clinical logic or model accuracy. It needs systems that remain reliable under regulatory scrutiny, evolving medical standards, and real-world clinical pressure. Computools approaches healthcare software engineering with this long-term operational reality in mind, backed by a delivery organisation of 250+ engineers and 400+ completed projects across regulated industries.

We help organizations build clinical decision support systems that are HIPAA- and FDA-compliant by treating compliance, governance, and architecture as first-class engineering concerns.

Across healthcare engagements, this approach has contributed to 40% reductions in administrative overhead and 45% faster patient data processing, driven by compliant automation and workflow optimisation.

Our teams combine healthcare domain expertise with disciplined software architecture development, ensuring that CDSS platforms remain stable as data volumes grow, models evolve, and clinical workflows change. Modular, auditable system design enables explainability, human oversight, and controlled model updates, all of which are required for production deployment in regulated clinical environments.

Beyond delivery, Computools supports long-term operational maturity through dedicated AI governance services that cover model lifecycle management, risk controls, documentation, and post-market monitoring. This governance-first approach enables CDSS solutions to move beyond pilots and scale safely across departments and facilities.

With experience spanning healthcare, finance, and other risk-driven domains, we engineer clinical decision support platforms that balance innovation with accountability, supporting clinicians at scale without compromising regulatory compliance or clinical authority.

Planning an AI-driven clinical decision support initiative? Reach out to discuss requirements, compliance constraints, and implementation considerations at info@computools.com.

As organizations evaluate partners for complex clinical AI initiatives, it’s helpful to understand the broader landscape of specialists. See our overview of the Top 25 Healthtech Software Development Companies in 2026 for a comparison of approaches and capabilities across the industry.

To sum up

AI clinical decision support has moved beyond experimentation and into the core of modern healthcare delivery. However, clinical value and regulatory safety are inseparable. Systems that fail to define scope, governance, explainability, and human oversight from the outset inevitably stall at the pilot stage or introduce long-term compliance risk.

Organizations that succeed treat AI as a controlled clinical support layer, not an autonomous decision-maker. When data governance, validation, workflow integration, and continuous monitoring are engineered together, machine-learning-based clinical decision support strengthens clinical judgment, improves operational efficiency, and scales safely within regulated healthcare environments.

Computools

Software Solutions

Computools is a digital consulting and software development company that delivers innovative solutions to help businesses unlock tomorrow.
