HIPAA-compliant AI architecture for healthcare has become a business-critical requirement as healthcare organizations face unprecedented exposure to data breaches, regulatory penalties, and escalating cyber risk.

Between 2009 and the end of 2024, U.S. healthcare entities reported 6,759 large data breaches, resulting in the exposure or unauthorized disclosure of more than 846 million individuals’ records.

In 2024 alone, more than 276 million records were compromised, driven mainly by ransomware and large-scale hacking incidents.

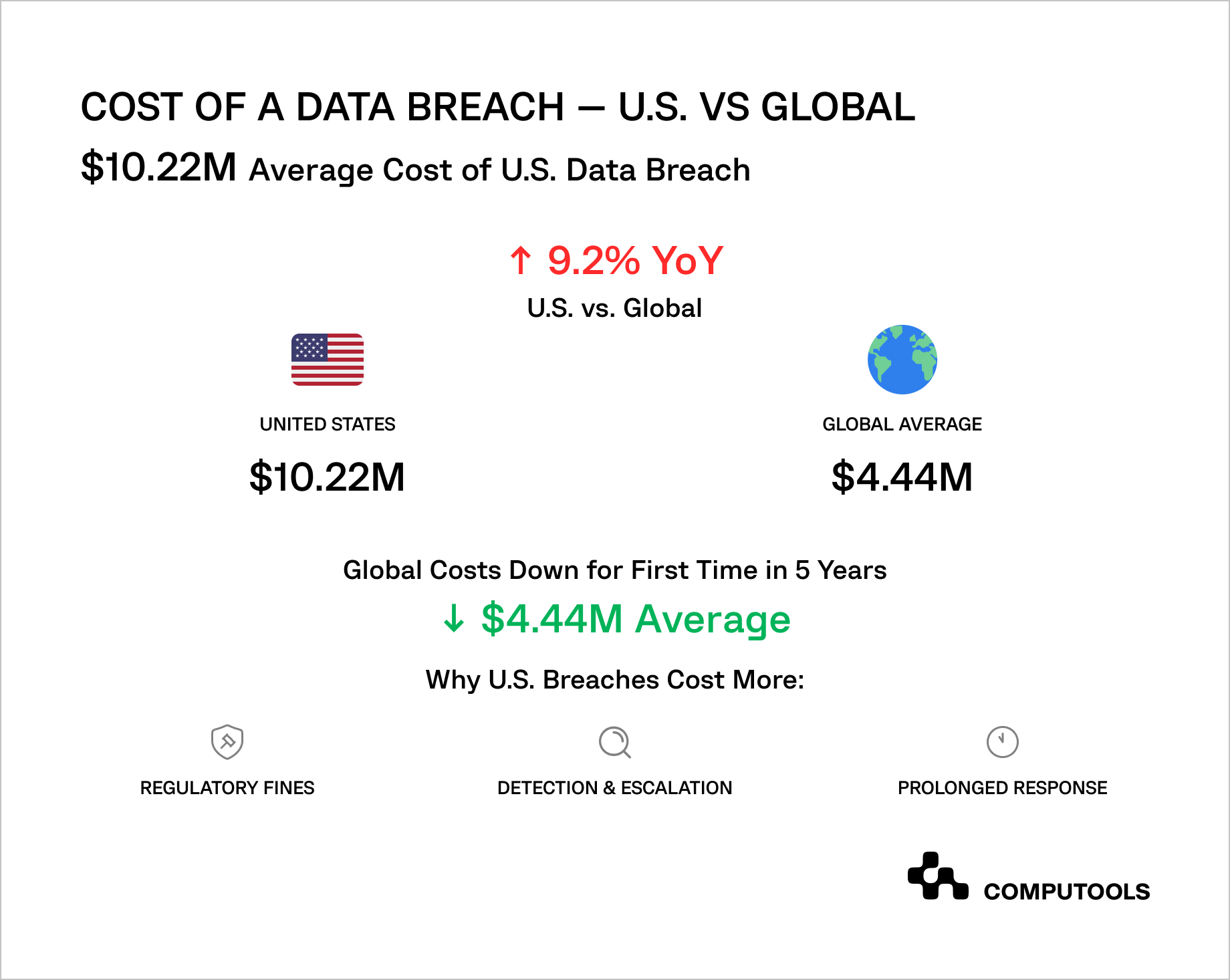

Financial impact is rising in parallel: according to IBM’s 2025 Cost of a Data Breach Report, the average cost of a data breach in the U.S. reached a record $10.22 million, up 9.2% year over year, driven in part by regulatory and incident-response costs.

For healthcare providers, payers, and digital health vendors adopting AI, this creates a clear and immediate risk. AI systems expand the attack surface through new data pipelines, automated processing of PHI, and third-party model dependencies. A single architectural mistake can rapidly escalate into a multi-million-dollar compliance failure, prolonged OCR investigations, operational disruption, and irreversible loss of patient trust.

How we applied AI architecture principles in a healthcare system

As medical organizations scale, disconnected data environments create blind spots in documentation, reporting, and operational decision-making. Without a unified data foundation, efficiency gains become increasingly difficult to achieve as patient volumes rise.

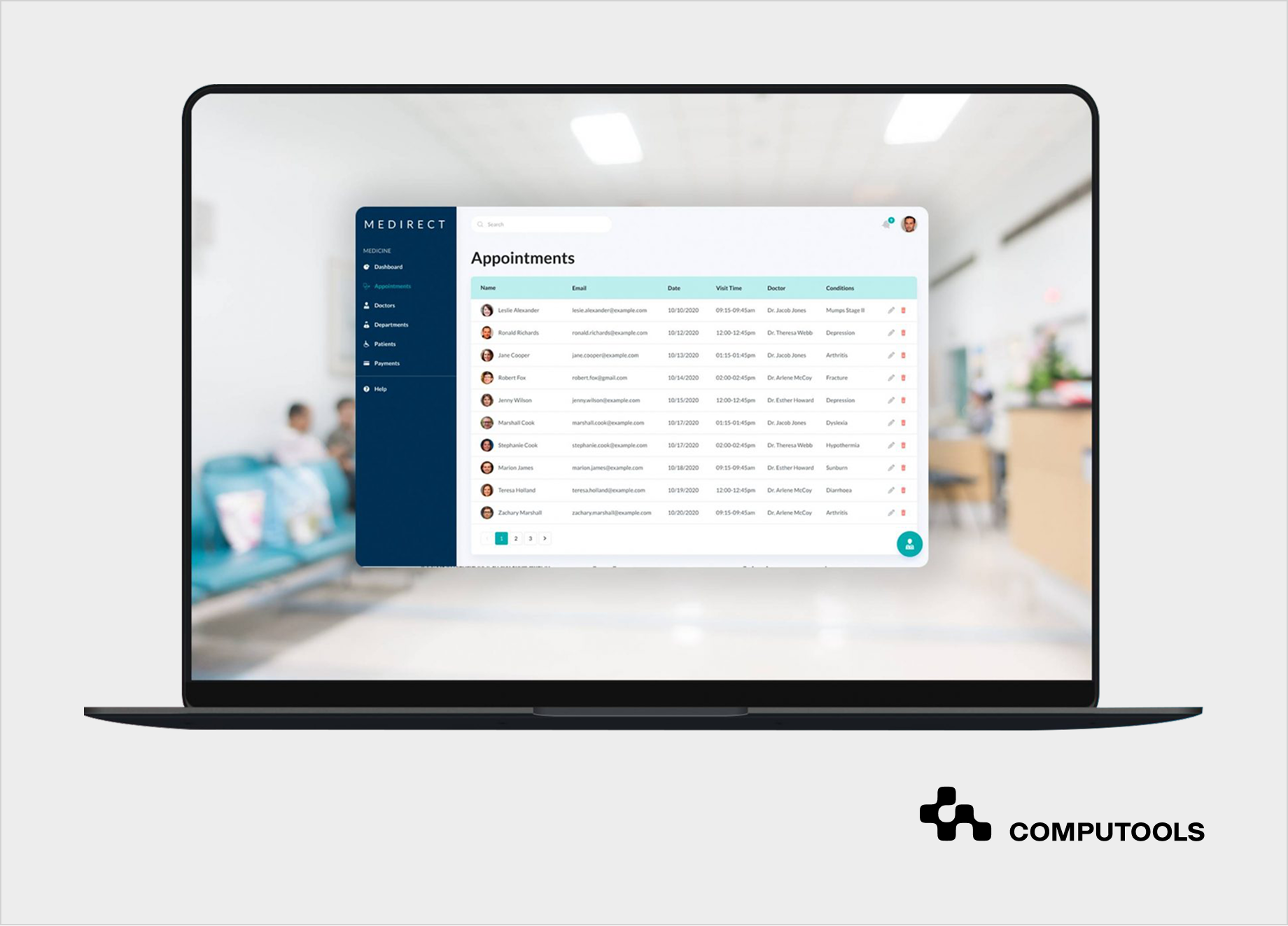

This was the challenge Medirect, a healthcare startup focused on operational efficiency for medical facilities, faced.

Medirect operated in an environment where patient data was distributed across multiple disconnected systems, each applying its own rules for calculations and documentation. This led to fragmented medical records, limited visibility into patient histories, and growing operational risk as patient volumes increased.

Clinicians and administrators were forced to work with incomplete or delayed information, slowing documentation and complicating coordination across facilities.

The objective was to redesign the platform around a single, reliable source of truth for patient records and clinical documentation. This required consolidating data ingestion, aligning calculation logic across workflows, and defining clear access boundaries for medical and administrative roles as part of a broader software architecture development effort.

We delivered a secure, centralized platform that replaced fragmented local processing with standardized data handling and documentation workflows. The result was a stable foundation for AI architecture for healthcare applications, enabling future intelligence layers without disrupting care delivery, while also supporting scalable hospital software development services aligned with real clinical operations.

A 10-step guide: how to design HIPAA-compliant AI architecture for healthcare applications with a real-world case

Based on more than a decade of hands-on experience delivering regulated healthcare platforms, we break down how to design HIPAA-compliant AI architecture step by step, focusing on real architectural decisions, compliance trade-offs, and implementation patterns validated in production environments.

Step 1: Define System Scope, Risk Level, and Decision Authority

Designing a HIPAA-compliant AI system starts with explicitly defining what the system is allowed to do and what it is not. In healthcare, this distinction is critical because regulatory exposure grows not with technical complexity, but with decision authority and the scope of healthcare AI compliance requirements the system must meet.

Teams must clearly establish whether the AI system supports administrative workflows, clinical documentation, analytics, or decision support. The moment an AI output directly influences clinical decisions, the compliance profile of the entire system changes. This is why scope definition must include explicit guardrails around automation, human oversight, and responsibility boundaries.

Equally important is assigning ownership. Every HIPAA-relevant AI system must have clear accountability for data, models, compliance decisions, and incident response. Without this, architectural decisions later in the process become fragmented and reactive, often leading to expensive, brittle, last-minute compliance fixes.
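One way to make scope and ownership explicit is to encode them as data that the platform can check at runtime. The sketch below is illustrative only: the capability names, the `SystemScope` class, and the owner label are hypothetical and not taken from any specific project.

```python
from dataclasses import dataclass
from enum import Enum


class Capability(Enum):
    ADMIN_WORKFLOW = "administrative_workflow"
    CLINICAL_DOCUMENTATION = "clinical_documentation"
    ANALYTICS = "analytics"
    DECISION_SUPPORT = "decision_support"


@dataclass(frozen=True)
class SystemScope:
    """Declares what an AI system may do and who is accountable for it."""
    name: str
    allowed: frozenset                # capabilities the system may perform
    human_review_required: frozenset  # capabilities whose output needs sign-off
    owner: str                        # accountable team (hypothetical label)

    def check(self, capability: Capability) -> str:
        # Anything not explicitly allowed is out of scope.
        if capability not in self.allowed:
            return "deny"
        if capability in self.human_review_required:
            return "allow_with_human_review"
        return "allow"


# Hypothetical scope for a documentation assistant.
scope = SystemScope(
    name="documentation-assistant",
    allowed=frozenset({Capability.ADMIN_WORKFLOW,
                       Capability.CLINICAL_DOCUMENTATION}),
    human_review_required=frozenset({Capability.CLINICAL_DOCUMENTATION}),
    owner="clinical-informatics-team",
)
```

Keeping the scope in one declarative object means a request for an out-of-scope capability, such as decision support here, is rejected by default rather than by convention.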

Medirect deliberately scoped its platform around centralized patient management and clinical documentation. From the outset, the system was designed to support healthcare staff rather than replace clinical judgment, thereby keeping the architecture within a manageable regulatory boundary.

Regulatory and architectural decisions define how healthcare systems evolve long before deployment. How to Build a Telehealth Platform for Hospitals and Outpatient Clinics explains how these constraints shape virtual care infrastructure and data flows.

Step 2: Map Data Flows and Identify PHI Touchpoints

After system scope and decision authority are defined, the next architectural priority is to gain absolute clarity on how data moves through the system. In healthcare AI, compliance failures rarely arise from incorrect data storage. They occur because teams do not fully understand where data flows, how it changes, and which components interact with it.

At this stage, the goal is to map the end-to-end lifecycle of patient data, from ingestion through processing, storage, analysis, and output. This includes primary records such as patient profiles, medical histories, and prescriptions, as well as derived artifacts: calculated metrics, intermediate datasets, logs, cached responses, and analytical outputs.

Each of these elements can still qualify as PHI depending on how easily it can be linked back to an individual. A proper data flow analysis forces teams to identify every point where sensitive data enters the system and to evaluate whether that exposure is necessary, controlled, and auditable.

This is the foundation of PHI compliant AI data processing, because compliance cannot be enforced selectively; it must apply consistently across every system boundary, integration, and internal workflow.

This step also surfaces non-obvious risk zones. Monitoring tools, background jobs, and error-handling mechanisms often process real patient data without being designed for regulated workloads. If these paths are not explicitly identified and secured, they become blind spots during audits and incident investigations.
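A data flow map can start as a simple inventory that is checked automatically. The following sketch assumes a hypothetical list of components and three yes/no attributes per component; real inventories would carry far more detail.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Touchpoint:
    component: str       # hypothetical component name
    handles_phi: bool    # does real patient data reach this component?
    encrypted: bool      # is data protected in this component?
    access_logged: bool  # is access to this component auditable?


def uncontrolled(touchpoints):
    """Return components that handle PHI without encryption and access logging."""
    return [
        t.component
        for t in touchpoints
        if t.handles_phi and not (t.encrypted and t.access_logged)
    ]


# Illustrative inventory, including auxiliary tooling that is easy to overlook.
inventory = [
    Touchpoint("ingestion-api", True, True, True),
    Touchpoint("error-tracker", True, False, False),
    Touchpoint("metrics-exporter", False, False, False),
    Touchpoint("nightly-report-job", True, True, False),
]

blind_spots = uncontrolled(inventory)
```

In this example the error tracker and the nightly job surface as blind spots, which is the kind of non-obvious path described above.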

In Medirect, centralization replaced fragmented local modules that processed patient metrics independently. This fragmentation made it difficult to trace how medical data was handled across the system. By unifying data flows into a centralized platform, the team clearly identified PHI touchpoints, standardized processing logic, and eliminated uncontrolled data paths that had previously introduced operational and compliance risks.

AI delivers value only when it fits existing clinical workflows and accountability models. How to Integrate AI Diagnostics Into Existing Hospital Workflows examines how hospitals introduce AI without disrupting care delivery.

Step 3: Design Privacy-First and Secure Data Pipelines

Once data flows and PHI touchpoints are fully mapped, the architecture must move from visibility to enforcement. The question is no longer where data travels, but how securely it is processed at each stage. In regulated healthcare, security must be built into the data pipeline itself rather than treated only as a perimeter concern.

A compliant AI system must assume that data will be continuously ingested, transformed, and analyzed. This makes pipelines a primary risk surface. Each transformation step—normalization, aggregation, feature extraction, and analytics—creates new data artifacts that may still contain sensitive information. Designing a secure pipeline, therefore, requires enforcing protection not only at storage boundaries but also throughout the data-in-motion lifecycle.

Encryption plays a foundational role here, but compliance depends on consistency rather than individual controls. Data must remain protected during ingestion, processing, intermediate storage, and output generation, without exceptions for internal services or trusted components.

This is why teams must architect an encrypted AI data pipeline for healthcare that applies encryption, access validation, and logging uniformly across all stages, including internal service-to-service communication.

Just as important is preventing accidental exposure through operational mechanisms. Debug logs, analytics dashboards, performance monitoring tools, and error traces often become unintended leakage points when pipelines are not designed with privacy constraints in mind. A privacy-first pipeline treats these artifacts as regulated outputs, subject to the same controls as primary data stores.
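As a minimal sketch of treating logs as regulated outputs, a filter from Python's standard `logging` module can redact identifier-like strings before a message is written. The two patterns are assumptions for illustration (an SSN-like number and a hypothetical `MRN-<digits>` format); pattern matching alone is not sufficient de-identification.

```python
import logging
import re

# Illustrative patterns only; real identifiers vary by organization.
PHI_PATTERNS = [
    re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),  # SSN-like number
    re.compile(r"\bMRN-\d+\b"),            # hypothetical record-number format
]


def redact(text: str) -> str:
    """Replace every match of a known pattern with a placeholder."""
    for pattern in PHI_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


class RedactingFilter(logging.Filter):
    """Redacts the fully formatted message before any handler sees it."""

    def filter(self, record: logging.LogRecord) -> bool:
        # Format first so values passed as arguments are redacted as well.
        record.msg = redact(record.getMessage())
        record.args = ()
        return True  # keep the record, now redacted


logger = logging.getLogger("pipeline")
logger.addFilter(RedactingFilter())
```

Attaching the filter to the logger rather than to individual call sites keeps redaction consistent even when new log statements are added later.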

By consolidating patient data processing into a centralized platform, Medirect eliminated multiple parallel data pipelines that previously handled sensitive information inconsistently. This allowed uniform encryption and access controls, reduced duplication of patient data across modules, and significantly lowered the risk of inadvertent exposure through auxiliary system components.

Step 4: Separate AI Processing from Clinical and Operational Systems

Many healthcare AI initiatives fail at this stage because intelligence components are too tightly coupled with operational systems. When AI logic is allowed to modify records, trigger workflows, or execute actions directly, the system becomes fragile, opaque, and difficult to defend during compliance reviews.

A compliant architecture requires a clear structural separation between AI processing and core clinical operations. AI services should operate as bounded analytical components that observe, evaluate, and generate recommendations but do not act autonomously. This separation ensures that clinical authority remains with healthcare professionals and that every AI-driven insight passes through explicit human validation before influencing care delivery or operational decisions.

From an architectural perspective, this means defining strict service boundaries and interaction contracts. AI components should expose well-defined interfaces for inference and analysis, while core systems retain control over state changes, approvals, and execution.

This pattern is fundamental to building a secure AI architecture for healthcare because it limits the blast radius of errors, model drift, or unexpected outputs, and prevents AI from becoming an uncontrolled actor within regulated workflows.

This separation also improves auditability. When AI outputs are consumed through controlled interfaces rather than embedded logic, it becomes possible to trace how insights were generated, reviewed, and applied. Regulators and internal compliance teams can verify what the AI produced and also how those outputs were used or deliberately not used within clinical processes.
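The separation can be sketched as two components with a narrow contract between them: the AI side only returns a recommendation object, and the core system refuses to apply it without a recorded human approval. All class and function names here are hypothetical, and `ai_suggest` is a stand-in for real inference.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    patient_ref: str
    suggestion: str
    model_version: str
    reviewed_by: Optional[str] = None
    approved: Optional[bool] = None


class ReviewRequired(Exception):
    """Raised when an unreviewed or rejected recommendation is applied."""


class ClinicalRecordService:
    """Core system: the only component allowed to change records."""

    def __init__(self):
        self.notes = {}

    def review(self, rec: Recommendation, clinician: str, approve: bool) -> None:
        # The human decision is stored on the recommendation for later audit.
        rec.reviewed_by = clinician
        rec.approved = approve

    def apply(self, rec: Recommendation) -> None:
        if rec.reviewed_by is None or rec.approved is not True:
            raise ReviewRequired("recommendation lacks clinician approval")
        self.notes.setdefault(rec.patient_ref, []).append(rec.suggestion)


def ai_suggest(patient_ref: str, text: str) -> Recommendation:
    """Stand-in for an inference call; it has no write access to records."""
    return Recommendation(patient_ref, f"Draft summary: {text[:40]}",
                          "hypothetical-model-1.0")
```

Because the AI function never receives a reference to the record service, a faulty output can at worst produce a bad suggestion, not a changed record.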

Medirect preserved clear role-based workflows for doctors, nurses, and administrators while introducing centralized data analysis capabilities. AI-driven insights enhanced visibility and efficiency without bypassing established operational controls or decision authority, keeping the platform aligned with healthcare compliance expectations.

Step 5: Govern the AI Model Lifecycle from Training to Inference

After establishing architectural separation, focus must shift to how AI models are actually created, trained, updated, and deployed over time. In healthcare, the greatest risk is losing oversight of how models evolve once they go into production.

A compliant AI system must treat models as regulated assets with a clearly governed lifecycle. This includes controlling the source of training data, how it is prepared, which versions of data and code were used, and how model behavior can be reproduced months or years later. Without this discipline, organizations cannot reliably explain why a model produced a specific output, a critical requirement for audits, incident reviews, or legal inquiries.

Training and inference must be designed as traceable processes rather than opaque operations. Every prediction should be attributable to a specific model version, configuration, and input context. This is the foundation of a HIPAA compliant machine learning pipeline, where accountability is preserved not only for data access but also for model behavior across its entire lifecycle.
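A minimal sketch of such traceability is an inference record that ties each output to a model version plus fingerprints of the configuration and input. Storing hashes instead of raw inputs is an assumption made here to avoid copying PHI into the trace; the field names are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(obj) -> str:
    """SHA-256 over a canonical JSON form, so key order does not matter."""
    canonical = json.dumps(obj, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class InferenceRecord:
    model_version: str
    config_hash: str  # which configuration produced the output
    input_hash: str   # which input context, without storing the input itself
    output: str


def record_inference(model_version, config, features, output) -> InferenceRecord:
    return InferenceRecord(
        model_version=model_version,
        config_hash=fingerprint(config),
        input_hash=fingerprint(features),
        output=output,
    )


# Hypothetical usage.
trace = record_inference(
    "risk-model-2.3.1",
    {"threshold": 0.7, "features": ["age_band", "visit_type"]},
    {"age_band": "40-49", "visit_type": "follow-up"},
    "low",
)
```

Two predictions made with the same version but different thresholds produce different `config_hash` values, which makes silent configuration changes visible in the record.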

Equally important is ensuring that model development does not unintentionally expand the compliance surface. Training environments often generate extensive logs, metrics, and debugging artifacts that can capture sensitive information if not properly controlled. A compliant pipeline explicitly restricts what can be logged, stored, or exported, and enforces the same privacy constraints during experimentation as in production.

Model updates also require governance. Even small changes to features, parameters, or thresholds can alter system behavior and affect compliance risk. For this reason, healthcare AI systems must implement controlled model promotion from development to production, with documented validation, approval, and rollback processes. This ensures that improvement does not come at the cost of regulatory defensibility.

Medirect’s unified data model and standardized calculation logic created a stable foundation for analytics and future AI capabilities. By avoiding fragmented local logic, the platform enabled consistent behavior across environments and reduced the risk of untraceable variations in analytical outcomes.

Step 6: Deploy AI Within a Controlled and Compliant Infrastructure

After establishing model lifecycle governance, attention turns to where and how models are deployed in production. In healthcare, deployment choices are not merely technical infrastructure decisions; they significantly influence the organization’s risk management, audit preparedness, and incident response capabilities.

A compliant deployment environment must be subject to continuous scrutiny. This means isolating environments by purpose, enforcing strict network boundaries, and ensuring that all components involved in data storage, processing, and inference operate under uniform security controls. Production systems handling PHI cannot share trust assumptions with development or experimentation environments, even if they run on the same underlying platform.

Cloud infrastructure can support these requirements only when compliance is intentionally designed into the architecture. This includes private networking, restricted ingress and egress, encryption enforced by default, and centralized identity and access management. More importantly, the IT infrastructure must provide verifiable evidence of control: logs, access histories, configuration states, and incident records that can withstand external review. This is the role of a HIPAA-compliant cloud architecture for healthcare, where scalability does not dilute governance.
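Controls like these can be checked against a description of each environment before anything is deployed. The sketch below uses a hypothetical `Environment` record and made-up network identifiers; it is not tied to any cloud provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Environment:
    name: str
    handles_phi: bool
    network_id: str           # hypothetical network identifier
    private_networking: bool
    encryption_enforced: bool
    public_ingress: bool


def violations(envs):
    """List isolation problems for PHI environments and their neighbours."""
    issues = []
    phi_networks = {e.network_id for e in envs if e.handles_phi}
    for e in envs:
        if e.handles_phi:
            if not e.private_networking:
                issues.append(f"{e.name}: private networking disabled")
            if not e.encryption_enforced:
                issues.append(f"{e.name}: encryption not enforced")
            if e.public_ingress:
                issues.append(f"{e.name}: public ingress enabled")
        elif e.network_id in phi_networks:
            # Non-PHI environments must not share trust with PHI ones.
            issues.append(f"{e.name}: shares a network with a PHI environment")
    return issues


# Illustrative estate with deliberate mistakes.
estate = [
    Environment("prod", True, "net-prod", True, True, False),
    Environment("dev", False, "net-prod", True, True, False),
    Environment("staging", True, "net-staging", True, False, True),
]
problems = violations(estate)
```

Running a check like this in a deployment pipeline produces the kind of configuration-state evidence that can later be shown to a reviewer.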

Deployment also defines how the organization responds when something goes wrong. A compliant architecture anticipates failures by embedding monitoring, alerting, and incident response workflows directly into the infrastructure layer. Detection and escalation are not afterthoughts; they are operational capabilities that determine whether an organization can meet regulatory notification timelines and limit downstream impact.

Medirect leveraged Azure as a centralized infrastructure layer to support secure storage, controlled access, and scalable deployment. This allowed the platform to grow without introducing fragmented environments or inconsistent security postures across different system components.

Step 7: Enforce Access Control, Auditability, and Continuous Monitoring

At this point, the architecture may already be secure by design, but HIPAA compliance depends on proof. Healthcare organizations must be able to demonstrate who accessed sensitive data, when it occurred, and whether the access was appropriate. Without this visibility, even well-designed systems become indefensible during audits or investigations.

Access control must therefore be precise and enforce the principle of least privilege across all roles. Clinical staff, administrators, engineers, and support teams should interact with the system in fundamentally different ways, with permissions aligned to their responsibilities rather than convenience. These controls must apply consistently across user interfaces, APIs, background services, and operational tooling, not just the primary application.
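Least privilege is easiest to reason about when the default answer is "no". The role and permission names below are hypothetical and simply illustrate a deny-by-default lookup.

```python
# Each role lists only the permissions it needs; everything else is denied.
ROLE_PERMISSIONS = {
    "doctor": frozenset({"read_clinical", "write_clinical"}),
    "nurse": frozenset({"read_clinical", "write_vitals"}),
    "administrator": frozenset({"read_scheduling", "write_scheduling"}),
    "engineer": frozenset({"read_system_metrics"}),
}


def is_allowed(role: str, permission: str) -> bool:
    """Deny by default: unknown roles and unlisted permissions return False."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())
```

The same function can sit behind user interfaces, APIs, and background jobs, so that an engineer's tooling, for example, cannot read clinical data just because it runs inside the platform.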

Equally important is auditability. Every interaction with patient data, whether direct access, transformation, or AI-driven analysis, must be recorded in a tamper-resistant manner. Logs should capture context, not just events, enabling compliance teams to reconstruct what happened without relying on assumptions or incomplete records. This level of traceability is central to maintaining healthcare data privacy in AI systems, because privacy involves not only limiting access but also continuously verifying that those restrictions are upheld.

Monitoring completes this layer. A compliant system cannot rely on periodic reviews alone; it must actively detect anomalies, unusual access patterns, and unexpected system behavior. Continuous monitoring allows organizations to respond early, contain incidents, and meet regulatory notification obligations before minor issues escalate into reportable breaches.
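As a deliberately simple illustration of access-pattern monitoring, the sketch below counts how many distinct patient records each user touched in a window and flags anyone above a limit. The event shape and the fixed threshold are assumptions; production systems would typically use per-role baselines and richer signals.

```python
from collections import Counter


def flag_unusual_access(events, max_patients_per_user):
    """Return users who accessed more distinct patients than the limit.

    `events` is an iterable of (user, patient_ref) pairs for one time window.
    """
    distinct = set(events)  # repeated access to the same record counts once
    per_user = Counter(user for user, _ in distinct)
    return sorted(u for u, n in per_user.items() if n > max_patients_per_user)


# Illustrative window of access events.
window = [
    ("nurse-a", "p1"), ("nurse-a", "p1"), ("nurse-a", "p2"),
    ("clerk-b", "p1"), ("clerk-b", "p2"), ("clerk-b", "p3"), ("clerk-b", "p4"),
]
flagged = flag_unusual_access(window, max_patients_per_user=3)
```

A flag is a prompt for review rather than proof of misuse, which is why it should feed an escalation workflow instead of blocking access outright.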

Medirect implemented tiered access for doctors, nurses, and administrators, ensuring that users could access only data relevant to their roles. This role-based structure simplified audit reviews and reduced unnecessary exposure to sensitive patient information across the platform.

Not all healthcare AI operates at the clinical decision level. AI Agents in Healthcare Industry: Smarter Automation for Better Patient Experience explores how organizations use AI for operational automation with lower regulatory exposure.

Step 8: Control AI Model Deployment, Updates, and Change Management

Even the most carefully designed healthcare AI system can become non-compliant if changes are introduced without control. In regulated environments, risk often arises during iterations when models are retrained, thresholds are adjusted, or new data sources are introduced under operational pressure.

A compliant architecture treats every model deployment as a regulated event rather than a routine technical update. This means that changes to models, configurations, or inference logic must follow a defined approval path, with documented rationale, validation evidence, and rollback procedures. Silent updates or continuous, ungoverned deployment pipelines undermine auditability and make it impossible to prove compliance after the fact.
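A promotion gate can enforce this approval path mechanically. In the sketch below, a hypothetical `PromotionRequest` must carry a rationale, a reference to validation evidence, an approver, and a rollback target before a deployment step is allowed to proceed.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PromotionRequest:
    model_version: str
    rationale: str
    validation_report: Optional[str]  # reference to stored evidence
    approved_by: Optional[str]
    rollback_version: Optional[str]   # version to restore if needed


def missing_requirements(req: PromotionRequest):
    """Return the names of required items that are absent or empty."""
    missing = []
    if not req.rationale.strip():
        missing.append("rationale")
    if not req.validation_report:
        missing.append("validation_report")
    if not req.approved_by:
        missing.append("approved_by")
    if not req.rollback_version:
        missing.append("rollback_version")
    return missing


def can_promote(req: PromotionRequest) -> bool:
    return not missing_requirements(req)
```

Returning the list of missing items, rather than a bare yes or no, gives the requester a record of exactly why a promotion was blocked.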

From a regulatory standpoint, what matters is not only which model is running, but also when, why, and under what conditions it was promoted to production. This level of control is essential for HIPAA compliant AI model deployment, where organizations must demonstrate that no unreviewed changes altered how patient data was processed or how AI outputs were generated.

Change management also protects clinical trust. Healthcare professionals need confidence that AI behavior is stable and predictable. When updates are traceable and communicated, AI becomes a reliable component of the system rather than a moving target that clinicians hesitate to rely on.

Medirect’s platform architecture supported controlled evolution of the system, allowing enhancements and refinements without disrupting existing workflows or introducing untracked behavioral changes. This ensured operational continuity while maintaining compliance readiness.

Step 9: Validate Compliance, Security Posture, and Real-World Impact

By this stage, the architecture exists in production workflows. Validation, therefore, must extend beyond functional correctness to include regulatory defensibility and operational resilience. A compliant AI healthcare system should be able to withstand scrutiny from auditors, security teams, and clinical stakeholders alike using evidence, not explanations.

This is where MLOps for HIPAA compliance becomes critical. Validation is no longer a one-time activity performed before launch; it is a continuous operational process. Organizations must be able to demonstrate that models behave consistently over time, that data handling remains aligned with declared policies, and that any deviation, whether caused by data drift, configuration changes, or usage patterns, is detected and addressed promptly.
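One common way to detect data drift is the population stability index (PSI), which compares how a feature is distributed in a baseline sample versus a recent one. PSI is a swapped-in example here, not something the platform described in this article is known to use; the bin edges and the smoothing constant are assumptions.

```python
import math


def psi(expected, actual, edges, eps=1e-6):
    """Population stability index between two samples.

    `edges` are ascending bin boundaries; values below the first edge fall
    in the first bin and values at or above the last edge in the last bin.
    Empty bins are floored at `eps` to keep the logarithm defined.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")

    def proportions(values):
        counts = [0] * (len(edges) + 1)
        for v in values:
            counts[sum(1 for e in edges if v >= e)] += 1
        return [max(c / len(values), eps) for c in counts]

    e_props = proportions(expected)
    a_props = proportions(actual)
    return sum((a - e) * math.log(a / e) for e, a in zip(e_props, a_props))


# Illustrative scores: the recent sample has shifted upward.
baseline = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8]
recent = [0.6, 0.7, 0.7, 0.8, 0.8, 0.9, 0.9, 0.9]
drift = psi(baseline, recent, edges=[0.25, 0.5, 0.75])
```

A frequently quoted rule of thumb treats values above roughly 0.25 as a significant shift, but the threshold that triggers review should be set and documented per model.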

Effective validation requires more than technical testing. It includes confirming that audit logs remain complete and immutable, access controls function as intended, and monitoring systems provide a clear, explainable trail of model behavior in real clinical contexts. Without this operational layer, even well-designed architectures become fragile under regulatory review.

Equally important is validating impact. Compliance exists to protect patients while enabling safe innovation. Organizations must assess whether the system improves efficiency, reduces operational friction, and supports healthcare professionals without introducing new risk vectors. When compliance validation is paired with outcome measurement, AI initiatives shift from defensive investments to trusted, scalable platforms.

After launch, Medirect demonstrated measurable operational value, including a 15% increase in market share. This outcome validated not only the product concept but also the architecture’s ability to sustain compliance, stability, and performance under real-world conditions.

Step 10: Scale and Evolve Without Rebuilding the Compliance Foundation

The final test of a HIPAA-compliant AI architecture is scale. As new features, use cases, and integrations are introduced, the system should evolve without reopening foundational compliance questions. If each expansion requires architectural rework or regulatory reinterpretation, the original design was not sufficiently robust.

A mature architecture allows organizations to add capabilities while reusing existing controls, governance models, and audit mechanisms. New AI use cases should fit into established data flows, access models, and deployment processes rather than creating parallel paths that increase risk. This approach turns compliance from a constraint into a stabilizing force that enables growth.

At this point, execution quality matters as much as design quality. Sustaining compliance at scale requires disciplined delivery practices, cross-functional alignment, and long-term ownership. This is where experienced healthtech software development services become critical, ensuring that engineering velocity, regulatory rigor, and operational reliability remain aligned as the platform grows.

Medirect’s centralized architecture allowed the platform to evolve without structural changes to data handling or access control. This enabled the expansion of functionality while preserving the compliance and security posture established from the outset.

Translate HIPAA constraints into a secure, scalable AI architecture—request a technical review and project estimate tailored to your healthcare use case.

Technical architecture requirements for HIPAA-compliant AI systems

Designing a HIPAA-compliant AI architecture for healthcare requires a security-first mindset, where privacy, protection, and auditability are embedded in the system architecture from the very beginning.

In healthcare AI, compliance issues typically arise when security and privacy controls are introduced after core architectural decisions have already been made, or when those controls are implemented unevenly across system layers.

• Security-First Design Principles. HIPAA-compliant AI systems must be architected with security as a foundational design constraint, not an operational add-on. This means that protection mechanisms apply uniformly across data storage, processing pipelines, model training environments, inference services, and internal communication layers.

• Data at Rest Encryption. All PHI stored in the AI system must be encrypted using strong, industry-standard algorithms such as AES-256. This requirement applies to primary databases, training datasets, derived features, model artifacts, and intermediate processing files. Encryption keys must be managed separately from encrypted data, with regular rotation and strict access controls.

• Data-in-Transit Protection. All data transfers between system components must be encrypted in transit using protocols such as TLS 1.3. This includes data flowing between ingestion services, training environments, inference servers, APIs, and client applications. Any unencrypted internal communication represents a compliance and breach risk.

• Zero-Trust Architecture. HIPAA-compliant AI platforms must assume that no internal component is inherently trusted. Zero-trust principles enforce strict authentication and authorization for every connection request, regardless of its origin. Network segmentation and micro-segmentation limit each service’s access to only what is required, significantly reducing the blast radius in the event of a compromise.
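For the data-in-transit requirement above, a client-side TLS context in Python's standard `ssl` module can refuse anything older than TLS 1.3. This is a minimal sketch for internal service-to-service calls; the function name is hypothetical, and it assumes the underlying OpenSSL build supports TLS 1.3.

```python
import ssl


def internal_tls_context() -> ssl.SSLContext:
    """Client context that verifies certificates and requires TLS 1.3."""
    # The default context already verifies certificates and host names.
    ctx = ssl.create_default_context()
    # Reject TLS 1.2 and older during the handshake.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3
    return ctx


ctx = internal_tls_context()
```

Applying the same context to internal calls as to external ones reflects the zero-trust principle that traffic is not trusted simply because it originates inside the network.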

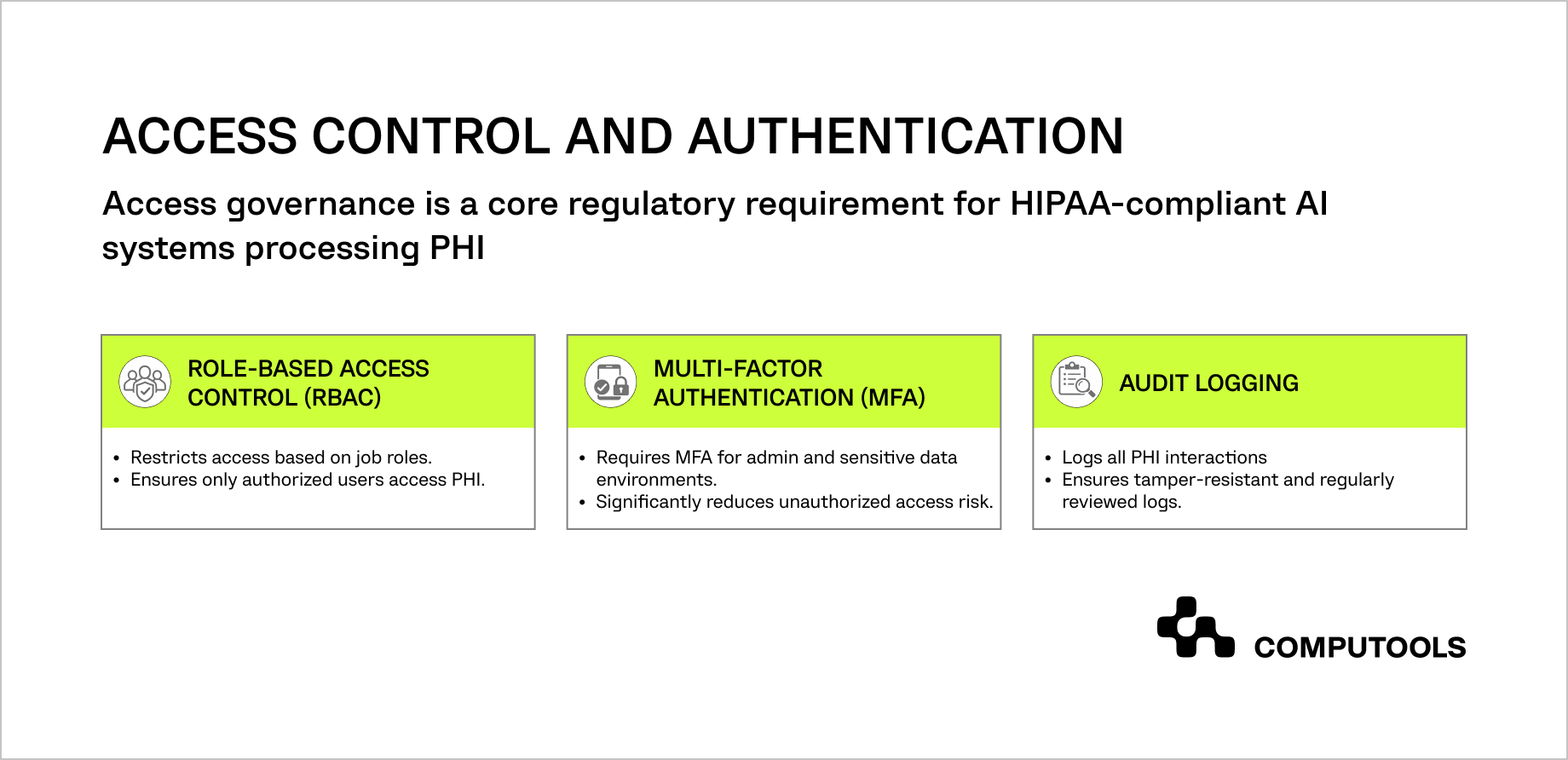

Access control and authentication

Access control and authentication mechanisms define how regulatory intent is enforced in day-to-day system operations. In HIPAA-compliant AI architectures, access governance determines who can view PHI and who can influence model behavior, configuration, and downstream clinical workflows. Weak access boundaries often become the root cause of compliance failures during audits and breach investigations.

By combining role-based access, mandatory multi-factor authentication, and tamper-resistant audit logs, healthcare organizations create a verifiable control surface that supports both operational efficiency and regulatory defensibility.

Core architectural principles for compliant AI

Principle 1: Privacy by Design

Privacy cannot be retrofitted after deployment. It must be enforced through architectural decisions that minimize exposure at every layer.

Key implementation patterns include:

• Data minimization: Limit PHI exposure during ingestion, feature engineering, model input, and output storage.

• Purpose limitation: Define explicit use cases for each AI capability and enforce technical controls that prevent unauthorized reuse.

• Retention policies: Implement automated data lifecycle management with enforced retention periods and secure deletion mechanisms.
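The patterns above can be sketched with an allow-list for model inputs and a simple retention check. The field names and the retention period used in the example are hypothetical; actual retention periods depend on the organization's policies and applicable law.

```python
from datetime import date, timedelta

# Only fields with a documented purpose reach the model (hypothetical names).
MODEL_INPUT_FIELDS = frozenset({"age_band", "visit_type", "length_of_stay_days"})


def minimize(record: dict) -> dict:
    """Drop every field that is not on the allow-list."""
    return {k: v for k, v in record.items() if k in MODEL_INPUT_FIELDS}


def is_expired(created: date, retention_days: int, today: date) -> bool:
    """True once a record has outlived its retention period."""
    return today > created + timedelta(days=retention_days)


# Illustrative source record containing direct identifiers.
raw = {
    "name": "Jane Doe",
    "phone": "555-0100",
    "age_band": "40-49",
    "visit_type": "follow-up",
    "length_of_stay_days": 2,
}
model_input = minimize(raw)
```

An allow-list fails safe: a new identifying field added upstream is excluded from model input until someone deliberately adds it.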

Principle 2: Security in Depth

Layered defenses protect healthcare AI systems against diverse threat vectors and operational failures.

A compliant architecture typically includes:

• Network security: Segmented networks, firewalls, intrusion detection, and DDoS protection.

• Application security: Strong authentication, RBAC, input validation, and secure API design.

• Data security: Encryption at rest and in transit, tokenization of sensitive fields, and secure key management.

• Monitoring and response: Real-time anomaly detection, automated incident response, and regular penetration testing.

This layered approach forms the foundation of a secure AI infrastructure for hospitals, where no single control is relied upon in isolation.

Principle 3: Auditability and Transparency

Healthcare AI systems must support investigation, validation, and regulatory verification at any time.

Audit architecture requirements include:

• Comprehensive logging of PHI access, model inferences, and system changes

• Write-once or cryptographically protected log storage to prevent tampering

• Long-term log retention aligned with regulatory timelines

• Reporting capabilities that support audits, investigations, and patient access requests
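Cryptographic protection of logs is often illustrated with a hash chain, where each entry includes the hash of the previous one so that any later edit breaks verification. The sketch below is a simplified, in-memory example with hypothetical event fields; it detects tampering but does not prevent it, so it complements write-once storage rather than replacing it.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append(log: list, event: dict) -> None:
    """Add an event, linking it to the hash of the previous entry."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"event": event, "prev": prev, "hash": _digest(prev, event)})


def verify(log: list) -> bool:
    """Recompute every hash; any edited or reordered entry returns False."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


# Illustrative audit trail.
audit_log = []
append(audit_log, {"actor": "dr-smith", "action": "read", "record": "p1"})
append(audit_log, {"actor": "model-2.3.1", "action": "inference", "record": "p1"})
append(audit_log, {"actor": "admin-7", "action": "config_change", "record": None})
```

Changing any field of an earlier entry, such as the actor of the second event, makes `verify` return False, which gives auditors a quick integrity check over the whole trail.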

Auditability transforms compliance from a legal assumption into a verifiable system property. All these requirements represent established healthcare AI security best practices rather than experimental controls. They ensure that AI systems remain compliant not only at launch, but throughout continuous operation, scaling, and evolution.

When security, privacy, and auditability are treated as architectural fundamentals, healthcare organizations can deploy AI with confidence without sacrificing trust, safety, or regulatory defensibility.

Why organizations choose Computools to deliver HIPAA compliant AI healthcare architecture

Computools has 250+ experts and 12+ years of experience delivering custom healthcare and healthtech software for hospitals, clinics, pharmacies, and digital health startups. Our teams work at the core of AI, data, cloud, and compliance, helping healthcare organizations move from fragmented systems to secure, scalable, and interoperable platforms.

Our healthcare solutions deliver measurable operational impact:

• 45% faster patient data processing

• 40% reduction in administrative costs

• 50% faster digital healthcare workflows

• 35% lower operational expenses

• 25% increase in patient retention and up to 30% fewer appointment no-shows

Our healthcare domain expertise includes over 20 projects across healthcare and healthtech delivered worldwide.

Computools provides AI development services focused on secure data pipelines, explainable models, and production-ready deployment in regulated healthcare environments. We design AI systems that integrate safely with clinical workflows rather than operate as isolated experiments.

To ensure long-term regulatory resilience, we also offer AI governance services, helping organizations define decision authority, access control models, auditability requirements, and operational guardrails that regulators expect of HIPAA-aligned platforms.

For patient- and clinician-facing solutions, our mobile app development services extend compliant healthcare architectures into real-world use cases, including patient engagement, remote monitoring, appointment management, and care coordination, without increasing PHI exposure or security risk.

Combining healthcare expertise, proven metrics, and compliant architecture, Computools helps organizations build trusted, resilient, scalable AI healthcare platforms.

Ready to implement HIPAA compliant healthcare AI solutions that are secure, auditable, and aligned with real clinical workflows? Contact our healthcare engineering team at info@computools.com to discuss your requirements.

Conclusion

In healthcare, AI success depends on architecture: clear data boundaries, enforceable access control, and auditable operations from day one. When compliance, security, and decision authority are not defined early, risk accumulates as systems scale.

A disciplined approach to HIPAA compliant AI system design establishes clear boundaries for data use, access, and accountability. This allows healthcare organizations to deploy AI where it creates value without compromising regulatory integrity or operational control.

Computools

Software Solutions

Computools is an IT consulting and software development company that delivers innovative solutions to help businesses unlock tomorrow.
